Hello,

I’m running a study with 6 days of well-being monitoring, 3 of which include intervention messaging for an experimental group and control messaging for a control group. I’d like to randomize my participants into experimental and control groups and then send the correct messages to each group.

I understand this can be done by applying criteria to the experimental and control messaging. However, as I understand it, criteria have to be based on a response given by the participant, so this solution is a bit tricky to implement. Have there been any updates on how to do randomization since the topic was last discussed? If I split the sample myself, can I assign the surveys to specific participants? Any advice you can offer is appreciated.

Greg

Hello Greg,

Thank you for contacting our support team.

At the moment, our system does not have the capability to automatically distribute participants into control and experimental groups. This functionality is planned for future updates. In the meantime, you can manually assign participants to specific sessions. To facilitate this, you can divide your sample and then use our dashboard to set up separate sessions for each participant group.

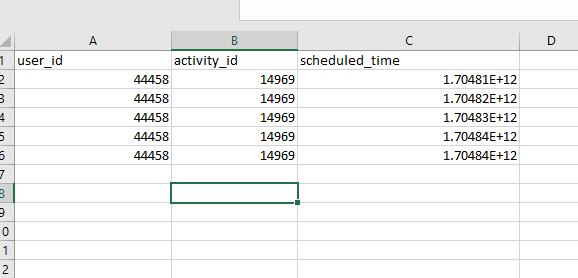

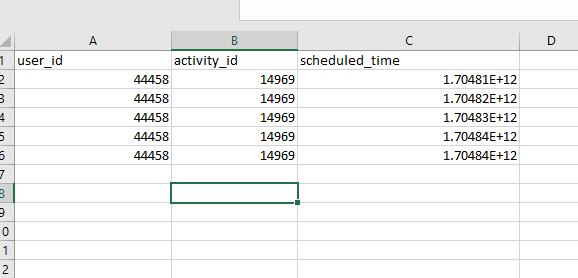

To proceed with this, you will need the participants’ IDs and the activity IDs, which can be found on the Participation and Activities Page. To set up sessions for each group, please fill in a CSV file formatted as shown in the attached image and upload it through the ‘Create new sessions’ button on the Activity Sessions Page, choosing the ‘Create via CSV file’ option. Be aware that the time of each session must be entered as the number of milliseconds since the epoch.
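If the CSV is built with a script rather than by hand, the millisecond timestamps can be computed instead of looked up. The sketch below is a minimal example assuming Python 3 and only the standard library; `to_epoch_ms` is a hypothetical helper name, and it uses a fixed UTC-5 offset for EST. For time zones that observe daylight saving time, `zoneinfo.ZoneInfo("America/New_York")` (Python 3.9+) would be the safer `tzinfo`.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
# Fixed-offset EST (UTC-5); this does NOT switch to EDT in summer.
EST = timezone(timedelta(hours=-5), "EST")


def to_epoch_ms(local_dt):
    """Convert a timezone-aware datetime to whole milliseconds since the Unix epoch."""
    if local_dt.tzinfo is None:
        raise ValueError("datetime must be timezone-aware")
    # Integer division of two timedeltas gives an exact int, avoiding float rounding.
    return (local_dt - EPOCH) // timedelta(milliseconds=1)


print(to_epoch_ms(datetime(2024, 2, 7, 12, 30, tzinfo=EST)))  # 1707327000000
```

Refusing naive datetimes is a deliberate choice: a time without a zone is exactly the kind of value that silently shifts every session by several hours.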

We hope this information is useful to you. If you have any more questions or need additional assistance, please feel free to reach out to us.

Kind regards,

Milad

Hi Milad,

Thank you for the reply; this is helpful. Glad to hear that feature is coming, as it will certainly be useful.

A few follow-up questions.

- Is this Unix epoch time (e.g., https://currentmillis.com/)? For example, if I wanted to schedule a survey on Feb. 7th at 12:30 EST, would it be 1707327000000?

- A complexity with this solution is that timing can no longer be relative to a participant’s start date, so to implement this I will need to find each participant’s start date and timezone, and then manually input the timing of each survey for each participant. Is that correct?

- Am I correct that I could schedule all 18 survey triggers in the same CSV by using different scheduled times and activity IDs? Each user ID will be assigned one activity ID (experimental or control survey), and they will get that activity 9 times at different scheduled times.
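Because the timing has to be managed outside the platform, one way to keep it relative to each participant is to generate the CSV from a list of start dates and time zones. This is a sketch under several assumptions: the column names are placeholders (use the headers from the template in the attached image), the activity IDs are made up, and the protocol of three prompts per day on study days 2 to 4 is hypothetical, chosen only to illustrate 9 deliveries per participant.

```python
import csv
import io
from datetime import date, datetime, time, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Hypothetical activity IDs; use the real ones from the Activities page.
ACTIVITY_IDS = {"experimental": 101, "control": 102}

# Hypothetical protocol: study days 2-4 (day 1 = start date), three prompts
# per day in the participant's local time, i.e. 9 deliveries per participant.
INTERVENTION_DAYS = (2, 3, 4)
PROMPT_TIMES = (time(9, 0), time(12, 30), time(19, 0))


def schedule_ms(start, tz):
    """Return the epoch-millisecond times of all prompts for one participant."""
    times = []
    for day in INTERVENTION_DAYS:
        local_date = start + timedelta(days=day - 1)
        for t in PROMPT_TIMES:
            local_dt = datetime.combine(local_date, t, tzinfo=tz)
            times.append((local_dt - EPOCH) // timedelta(milliseconds=1))
    return times


def build_csv(participants):
    """One row per (participant, prompt); the header names are placeholders."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["participant_id", "activity_id", "scheduled_time_ms"])
    for p in participants:
        activity_id = ACTIVITY_IDS[p["arm"]]
        for ms in schedule_ms(p["start"], p["tz"]):
            writer.writerow([p["participant_id"], activity_id, ms])
    return buf.getvalue()


# Illustrative participants; fixed offsets ignore daylight saving time.
EST = timezone(timedelta(hours=-5), "EST")
PST = timezone(timedelta(hours=-8), "PST")
participants = [
    {"participant_id": 1, "arm": "experimental", "start": date(2024, 2, 6), "tz": EST},
    {"participant_id": 2, "arm": "control", "start": date(2024, 2, 8), "tz": PST},
]
print(build_csv(participants))
```

Keeping the protocol in two constants means a change to the schedule is made once rather than in every row of the CSV.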

I will likely have more questions as I begin to try and implement this solution. Thanks again, Milad!

Hi Greg,

To answer your questions first:

- Yes, you are right.

- Again, you are right, but I would rephrase your sentence as “the complexity of this method is that the timing of the surveys must all be managed outside the platform”; i.e., the timing can still be relative to each participant.

- You are again correct.

Now, looking at the problem you originally asked about: the fact is that Avicenna currently does not offer an easy solution for multi-arm studies (that’s embarrassing). Until we add it, there are multiple workarounds, each with its own complications.


Workaround 1

You can create one study for each arm, e.g. “Control Study” and “Experimental Study”. Then randomize participants outside of the study, and ask them to join the arm they are randomly assigned to.

Advantage: Everything will be managed by the app.

Disadvantage: You will have two copies of your study. If the protocols of the two arms have a lot in common, you will have to do some repetitive work to implement the shared parts twice.
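For the randomization itself, which in this workaround happens outside Avicenna, a minimal Python sketch: shuffle the participant list and deal participants to the arms in turn, which keeps arm sizes within one of each other. The function name, participant IDs, and seed are illustrative assumptions, not part of the platform.

```python
import random


def randomize(participant_ids, arms=("control", "experimental"), seed=None):
    """Randomly allocate participants to arms with sizes differing by at most one.

    The list is shuffled, then participants are dealt to the arms in turn.
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: arms[i % len(arms)] for i, pid in enumerate(ids)}


# Illustrative IDs and seed; keep the seed so the allocation can be reproduced.
participants = [f"P{n:02d}" for n in range(1, 21)]
allocation = randomize(participants, seed=2024)
for pid in participants:
    print(pid, allocation[pid])
```

Each participant would then be sent the join link for the study matching their allocated arm. Passing more arm names (e.g. three) works the same way.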

Workaround 2 (My favorite)

Create one study, then in the baseline, ask a question along the lines of “Which arm do you belong to?”. Then randomize participants outside of the study, and ask them to join the study and choose the proper arm when they are asked to.

Advantage: Everything will be handled by the app as you expect.

Disadvantage: You need to make sure people make the right choice when they are asked. Honestly, this is not that hard: you can simply monitor the incoming data, and if someone chooses the wrong arm, you can correct it.

P.S.: Next week we will be releasing a feature that allows researchers to modify participants’ responses. That can help a lot if you choose this workaround.
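Checking the self-reported arm against your randomization list can be scripted once the baseline answers are exported. This sketch assumes both are available as simple participant-ID-to-arm mappings; the export step, the function name, and the IDs shown are assumptions for illustration.

```python
def find_mismatches(assigned, reported):
    """Compare each participant's randomized arm with the arm they selected.

    Returns {participant_id: (assigned_arm, reported_arm)} for every participant
    whose answer differs; reported_arm is None if they have not answered yet.
    """
    return {
        pid: (arm, reported.get(pid))
        for pid, arm in assigned.items()
        if reported.get(pid) != arm
    }


# Hypothetical data: the randomization list and the exported baseline answers.
assigned = {"P01": "control", "P02": "experimental", "P03": "control"}
reported = {"P01": "control", "P02": "control"}
print(find_mismatches(assigned, reported))
# {'P02': ('experimental', 'control'), 'P03': ('control', None)}
```

Participants flagged with a wrong answer are the ones whose response would need correcting; those flagged with `None` simply have not reached the question yet.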

Workaround 3

Use what @milad.moosavi suggested. You mentioned some of its complexities, and I agree. Overall, while this method works, I don’t think it’s an ideal solution given those complexities.

Let me know what you think.

Best,

Mohammad

Hi Mohammad,

Thanks very much for your feedback and suggestions. I think I will ultimately implement workaround 1. I’ll download the activities and import the definition files so I don’t need to build the surveys twice.

I’ll reach out if I run into further issues.

All the best,

Greg


I think I will have success with the two-study solution in my case (workaround 1).

I wanted to add a small detail about workaround 2.

Just before randomizing, you could add a section of information-only questions that tells participants to pay close attention to the picture, because they will be asked to enter it on the next page. They are then shown a picture of either a 1, 2, or 3 (for a three-condition study). Next, they are asked to select which number they were shown (1, 2, or 3), and you use that question as the criterion variable for the condition-specific sections of your survey.
